C-Miner: Mining Block Correlations in Storage Systems on FAST 2014.

Storage Software access data based on the semantic patterns. This semantic link is referred to as block correlations.

For example, in a database that uses index trees such as B-trees to speed up query performance, a tree node is correlated to its parent node and its ancestor nodes. Similarly, in a file server backend storage system, a file block is correlated to its inode block.

Unfortunately, information about block correlations are unavailable at a storage system because a storage system exports only block interfaces.

This semantic link also widely emerged in Graph, as discussed in FlashGraph. Because the semantic links in graph are so dominant, the I/O patterns of graph analysis applications are mainly consist of random I/Os. The random I/Os throughput of SSDs today is only two or three times less than their sequential I/O. This might be because of the cache hit ratio of mapping table look up performance for random I/O is very low, and each I/O will cause one mapping page read and one data page read.

This paper propose a practical black box approach to mining the block correlations in storage systems.

In the first step, the preprocessing use non-overlapped cutting to splits the access stream into access sequences of equal size. The performance of overlapping cutting would be better. However, most frequent subsequences would still be considered frequent after non-overlapped cutting.

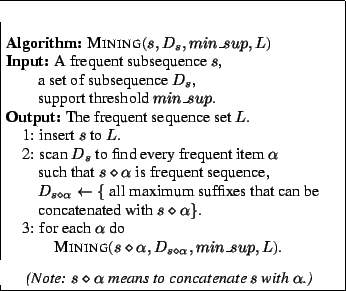

The second step is mining the frequency sequences. The algorithm to mine the frequency sequences is an iterative algorithm 1. The algorithm makes the assumption that if a sequence is frequent, then all of its subsequences are frequent. So, the algorithm first search the frequent subsequences with length 1, and then with length 2 and so on.

The third step is generating rules from frequent sequences, e.g. if block a is accessed then block c will be accessed. Each rules has an confidence to measure the probable of truth estimation.

The rules generated for real world traces are very small. For 3 days Cello-92 trace, there are 228k rules generated in 7800s (2.1h) with a size of 3.1MB. The rules for a give trace is very stable. So we don’t need to generate new rules constantly.